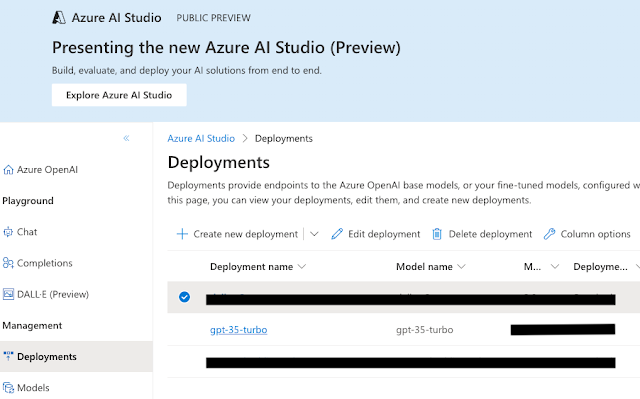

In this article I will explore how you can use the C# code generated by "Azure OpenAI Studio" to create your own application. This assumes that you have an Azure subscription and access to Azure OpenAI.

Getting Started

Go to https://oai.azure.com.

Let us play in the “Chat playground”. Click on “Chat playground”.

Set the system message to:

You are an AI assistant that helps people with baking.

Then enter the following prompt:

Give me an easy recipe for baking a loaf of bread that takes about 30 minutes preparation and about 40 minutes in the oven.

The response I received:

Certainly! Here's a simple recipe for baking a loaf of bread with a total time of approximately 1 hour and 10 minutes:

Add the salt and olive oil to the yeast mixture, and gradually add the flour, one cup at a time. Stir well after each addition until a shaggy dough forms.

Once the dough starts to come together, transfer it onto a lightly floured surface and knead for about 5-7 minutes until it becomes smooth and elastic. If the dough is too sticky, you can add a little more flour as needed.

Preheat your oven to 375°F (190°C) while the dough is rising.

After the dough has risen, punch it down gently to release any air bubbles. Transfer it to a greased loaf pan, and shape it into a loaf by tucking the edges under.

Cover the loaf pan again with the kitchen towel or plastic wrap, and let it rise for an additional 10 minutes.

Once the dough has risen for the second time, remove the cover and place the pan in the preheated oven. Bake for about 35-40 minutes or until the bread is golden brown and sounds hollow when tapped on the bottom.

Once baked, remove the bread from the oven and let it cool in the pan for a few minutes. Then transfer it to a wire rack to cool completely before slicing.

Enjoy your freshly baked bread!

Using generated C# code

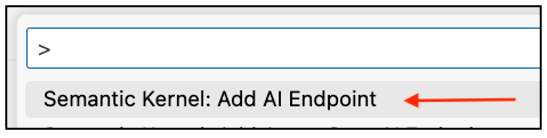

Click on “View code”.

Note the message at the top. It asks you to add a specific version of a package to your C# app.

To get started with a basic console application, click on the “Learn more” link at the bottom.

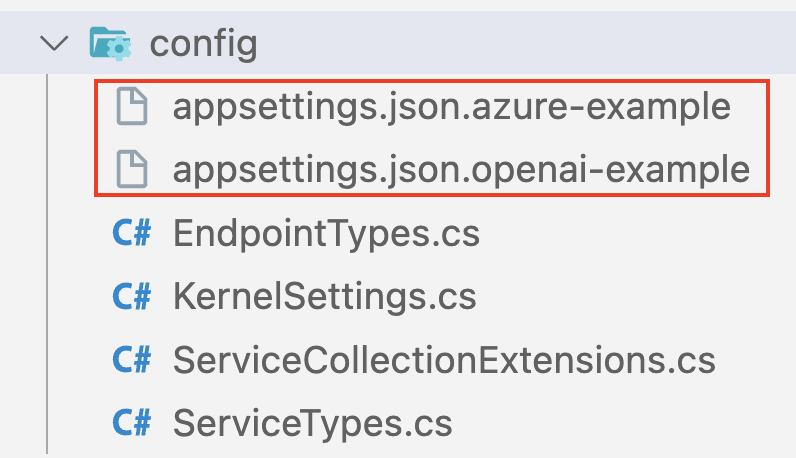

    dotnet new console -n azure-openai-quickstart
    cd azure-openai-quickstart
    dotnet add package Azure.AI.OpenAI --prerelease

Run the above commands, then replace the code in Program.cs with the code that was generated by “Azure OpenAI Studio”.

You will need to enter your AZURE_OPENAI_API_KEY at around line 8 in Program.cs. The key is shown just below the sample code in “Azure OpenAI Studio”.
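If you would rather not paste the key into Program.cs, one option is to read it from an environment variable. The following is a minimal sketch, not part of the generated code: it assumes you have set an environment variable named AZURE_OPENAI_API_KEY yourself, and RequireSetting is a hypothetical helper introduced only for this illustration.

```csharp
using System;

// Hypothetical helper (not part of the generated code): returns the trimmed
// value, or throws with a readable message when the setting is missing.
static string RequireSetting(string name, string? value)
{
    if (string.IsNullOrWhiteSpace(value))
    {
        throw new InvalidOperationException(
            $"Set the {name} environment variable before running the app.");
    }
    return value.Trim();
}

// Assumption: the key was stored in an environment variable with this name.
string? rawKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

try
{
    string apiKey = RequireSetting("AZURE_OPENAI_API_KEY", rawKey);
    // Pass apiKey wherever the generated code expects the key.
    Console.WriteLine("API key found.");
}
catch (InvalidOperationException ex)
{
    Console.Error.WriteLine(ex.Message);
}
```

This keeps the key out of source control, and a missing variable produces a clear message instead of a failed request later on.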

If you run “dotnet build”, you will see some errors. This is because we did not install the specific preview version of the Azure.AI.OpenAI package that was suggested; the --prerelease flag most likely gave you a more recent one. The version I have at the time of writing (January 2024) is 1.0.0-beta.12. All the errors pertain to how ChatMessage is used when creating ChatCompletionsOptions. Replace the code for responseWithoutStream with the following:

    Response<ChatCompletions> responseWithoutStream = await client.GetChatCompletionsAsync(
        new ChatCompletionsOptions()
        {
            // The name of your model deployment
            DeploymentName = "gpt-35-turbo",
            // System message first, then the user prompt
            Messages =
            {
                new ChatRequestSystemMessage(@"You are an AI assistant that helps people find information."),
                new ChatRequestUserMessage(@"Give me an easy recipe for baking a loaf of bread that takes about 30 minutes preparation and about 40 minutes in the oven."),
            },
            Temperature = (float)0.7,
            MaxTokens = 800,
            NucleusSamplingFactor = (float)0.95,
            FrequencyPenalty = 0,
            PresencePenalty = 0,
        });

The code as it stands does not print anything, so let us display the AI response. Add the following code to the bottom of Program.cs:

Console.WriteLine(response.Choices[0].Message.Content);
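For reference, here is a sketch of how the pieces above might fit together in a single Program.cs. It assumes version 1.0.0-beta.12 of Azure.AI.OpenAI, as used in this article. The endpoint is a placeholder that you must replace with your own resource's endpoint, the key is assumed to live in an environment variable named AZURE_OPENAI_API_KEY, and BuildOptions is a hypothetical helper that is not part of the generated code.

```csharp
using System;
using Azure;
using Azure.AI.OpenAI;

// Hypothetical helper: builds the same request as shown in the article,
// with the deployment name and message texts passed in as parameters.
static ChatCompletionsOptions BuildOptions(string deployment, string systemText, string userText)
{
    return new ChatCompletionsOptions()
    {
        DeploymentName = deployment,
        Messages =
        {
            new ChatRequestSystemMessage(systemText),
            new ChatRequestUserMessage(userText),
        },
        Temperature = 0.7f,
        MaxTokens = 800,
        NucleusSamplingFactor = 0.95f,
        FrequencyPenalty = 0,
        PresencePenalty = 0,
    };
}

// Assumption: placeholder endpoint; use the one shown for your own resource.
string endpoint = "https://YOUR-RESOURCE-NAME.openai.azure.com/";
// Assumption: the key is stored in this environment variable.
string? apiKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

if (string.IsNullOrWhiteSpace(apiKey))
{
    Console.Error.WriteLine("AZURE_OPENAI_API_KEY is not set; skipping the request.");
}
else
{
    var client = new OpenAIClient(new Uri(endpoint), new AzureKeyCredential(apiKey));

    ChatCompletionsOptions options = BuildOptions(
        "gpt-35-turbo",
        "You are an AI assistant that helps people with baking.",
        "Give me an easy recipe for baking a loaf of bread that takes about 30 minutes preparation and about 40 minutes in the oven.");

    Response<ChatCompletions> responseWithoutStream = await client.GetChatCompletionsAsync(options);
    ChatCompletions response = responseWithoutStream.Value;

    // Print the text of the first (and here only) choice.
    Console.WriteLine(response.Choices[0].Message.Content);
}
```

Keeping the request construction in its own function makes it easy to try other prompts or deployments without touching the client code.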

Run the app and you will see the response from the AI. In my case I received a very similar response to what I previously got.

Conclusion

"Azure OpenAI Studio" can help you get started with the development of a C# app that utilizes services from "Azure OpenAI".